A deep learning framework is a tool that helps users build deep learning models. Such frameworks lower the barrier to entry: you don't need to code a complex neural network from scratch, and you can use existing models as needed.

To use a metaphor, a deep learning framework is like a set of building blocks. Each block is a component of a model or algorithm, and users can design and assemble the blocks to meet the requirements of their data set.

Of course, for the same reason, no framework is perfect. Just as a set of building blocks may lack the particular brick you need, each framework has its gaps, and the different frameworks do not cover exactly the same ground.

There are many deep learning frameworks, and there is no uniform standard for judging which are "good" or "bad". When you start a deep learning project, it is therefore worth studying which frameworks are available and which one suits you best. At the time of writing, I couldn't find a concise guide that tells people where to start.

First, let's get acquainted with the main deep learning frameworks.

Caffe

Caffe is one of the most mature frameworks and was developed by the Berkeley Vision and Learning Center. It is modular and very fast, and it supports multiple GPUs with very little extra work. It uses a JSON-like text file to describe the network architecture and the solver method.

In addition, the Model Zoo, where you can download Caffe models and network weights, helps you get started quickly. Note, however, that tuning hyperparameters in Caffe is more cumbersome than in other frameworks, in part because a separate solver and model file must be defined for each set of hyperparameters.

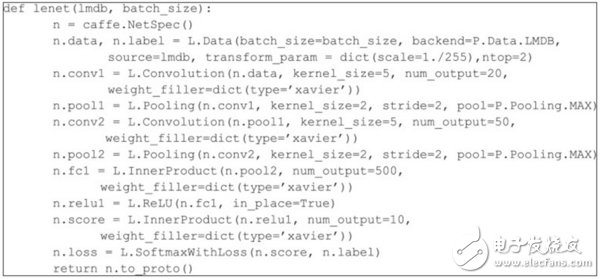

▲ LeNet CNN implementation code example written in Caffe

The figure above shows a code fragment for the LeNet CNN architecture, a 7-layer convolutional network made up of convolution, max-pooling, and activation layers.

Deeplearning4j

Deeplearning4j is a multi-platform framework with GPU support, developed by Adam Gibson and the team at Skymind. It is written in Java and has a Scala API. Deeplearning4j is also a mature framework, with many examples available on the Internet and support for multiple GPUs.

TensorFlow

TensorFlow is a relatively new framework developed by Google, but it has already been widely adopted. It performs well and supports multiple GPUs and CPUs. TensorFlow provides tools for tuning a network and monitoring its performance, such as TensorBoard, and there is also an educational tool available as a web application.

Theano

Theano is a framework for building networks from symbolic expressions. It is written in Python but takes advantage of NumPy's efficient code base to improve performance over standard Python. Theano is very good at building networks, but creating a complete solution with it is more of a challenge. Theano treats the gradient computation used in machine learning as a "free" by-product of network creation, which may be useful for those who want to focus more on network architecture than on gradient computation. In addition, the quality of its documentation is quite good.

One caveat worth remembering: Theano is no longer being updated.
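To make the idea of gradients as a by-product of a symbolic graph more concrete, here is a minimal sketch in plain Python. It is not Theano's API: the classes `Var`, `Const`, `Add`, and `Mul` are hypothetical names invented for this illustration. The point is only that once a computation is described as a graph of symbolic nodes, a gradient expression can be derived from that same graph before any numbers are supplied.

```python
# Toy symbolic expression graph (illustrative only; NOT Theano's API).
# All class names here are hypothetical and exist just for this sketch.

class Var:
    """A named symbolic input whose value is supplied at evaluation time."""
    def __init__(self, name):
        self.name = name

    def eval(self, env):
        return env[self.name]

    def grad(self, wrt):
        # d(var)/d(wrt) is 1 for the same variable, 0 otherwise.
        return Const(1.0 if self is wrt else 0.0)


class Const:
    """A fixed numeric value."""
    def __init__(self, value):
        self.value = value

    def eval(self, env):
        return self.value

    def grad(self, wrt):
        return Const(0.0)


class Add:
    def __init__(self, a, b):
        self.a, self.b = a, b

    def eval(self, env):
        return self.a.eval(env) + self.b.eval(env)

    def grad(self, wrt):
        # Sum rule: (a + b)' = a' + b'
        return Add(self.a.grad(wrt), self.b.grad(wrt))


class Mul:
    def __init__(self, a, b):
        self.a, self.b = a, b

    def eval(self, env):
        return self.a.eval(env) * self.b.eval(env)

    def grad(self, wrt):
        # Product rule: (a * b)' = a' * b + a * b'
        return Add(Mul(self.a.grad(wrt), self.b), Mul(self.a, self.b.grad(wrt)))


# Step 1: describe the computation symbolically (no numbers yet).
w, x, b = Var("w"), Var("x"), Var("b")
y = Add(Mul(w, x), b)    # y = w * x + b
loss = Mul(y, y)         # loss = y ** 2

# Step 2: the gradient is itself a new symbolic expression built from the graph.
dloss_dw = loss.grad(w)

# Step 3: only now are concrete values plugged in.
env = {"w": 2.0, "x": 3.0, "b": 1.0}
loss_value = loss.eval(env)
grad_value = dloss_dw.eval(env)
print(loss_value, grad_value)
```

With these inputs, `y` is 7, so the loss is 49 and the derivative with respect to `w` is 2 * y * x = 42. A real framework would also simplify and compile the graph, which this sketch does not attempt.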

Lasagne

Lasagne is written in Python and built on top of Theano. It is a relatively simple system that makes network construction easier than using Theano directly. As a result, its performance largely reflects that of the underlying Theano.

Keras

Keras is a framework written in Python that can use either Theano or TensorFlow as its back end (as shown below). This makes it easier to build a complete solution with Keras, and because each line of code creates one network layer, the code is also easier to read. In addition, Keras offers an excellent selection of state-of-the-art algorithms (optimizers, normalization routines, activation functions).

Note that although Keras supports both the Theano and TensorFlow back ends, the two make different assumptions about the dimension ordering of the input data, so careful design is required for code to work with both. The project is thoroughly documented and provides examples for a variety of problems, as well as pre-trained models of commonly used architectures for transfer learning.

At the time of writing, it had been announced that TensorFlow would adopt Keras as its preferred high-level package. This is not surprising, given that Keras's developer, Francois Chollet, is himself a software engineer at Google.
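The dimension-ordering difference can be illustrated with a small helper. This is a sketch in plain Python, and `image_input_shape` is a hypothetical function written for this article, not part of Keras. It assumes the two conventions commonly associated with the back ends: Theano-style "channels first" (channels, rows, columns) and TensorFlow-style "channels last" (rows, columns, channels). In Keras 2 the active convention can be read with `keras.backend.image_data_format()`, and its result could be passed in as `ordering`.

```python
def image_input_shape(rows, cols, channels, ordering):
    """Return the per-sample input shape for a 2D image.

    ordering is "channels_first" (Theano-style) or
    "channels_last" (TensorFlow-style). Hypothetical helper for illustration.
    """
    if ordering == "channels_first":
        return (channels, rows, cols)
    if ordering == "channels_last":
        return (rows, cols, channels)
    raise ValueError("unknown ordering: %r" % (ordering,))


# The same 28x28 grayscale image needs a different shape under each convention.
theano_style = image_input_shape(28, 28, 1, "channels_first")
tensorflow_style = image_input_shape(28, 28, 1, "channels_last")
print(theano_style, tensorflow_style)
```

Computing the shape in one place like this, rather than hard-coding it in every layer definition, is one way to keep a model definition portable across both back ends.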

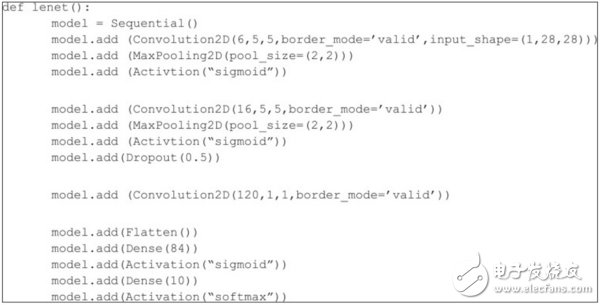

▲ LeNet CNN implementation code example written in Keras

MXNet

MXNet is a deep learning framework written in C++ with bindings for multiple languages. It supports distributed computing, including multiple GPUs, and provides access to low-level constructs as well as higher-level, symbolic APIs. Its performance is considered comparable to that of other frameworks such as TensorFlow and Caffe. Many tutorials and training examples for MXNet are available on GitHub.

Microsoft Cognitive Toolkit (CNTK)

CNTK is a framework developed by Microsoft and has been described as the "Visual Studio" of machine learning. For those who already use Visual Studio for programming, it may be a gentler and more effective way into deep learning.

DIGITS

DIGITS is a web-based deep learning development tool from NVIDIA. Much like Caffe, it lets you describe networks and parameters in text files rather than in a programming language. It has a network visualization tool, which makes errors in the text files easier to spot. In addition, it has tools for visualizing the learning process and supports multiple GPUs.

Torch

Torch is a mature machine learning framework written in C, with Lua as its scripting language. It is well documented and can be adapted to specific needs. Because it is written in C, Torch's performance is very good.

PyTorch

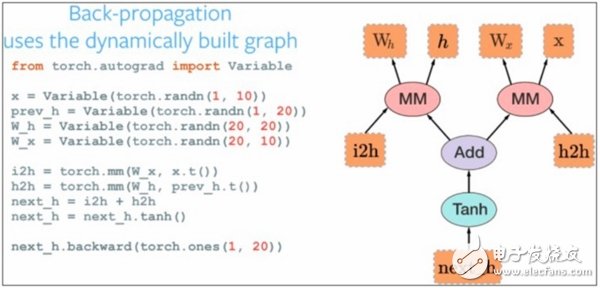

PyTorch is the python front end of the Torch calculation engine, which not only provides Torch's high performance, but also provides better support for the GPU. The developer of the framework said that the difference between PyTorch and Torch is that it is not just a package, but a deep integration framework, which makes PyTorc more flexible in network construction. (As shown below)

â–² PyTorch code example and equivalent block diagram

Chainer

Chainer differs from other frameworks in that it treats network construction as part of the computation itself. Its developers point out that most other tools are "define-and-run", meaning you have to define the architecture before you can run it. Chainer instead builds and optimizes the architecture as part of the learning process, an approach called "define-by-run".
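The define-by-run idea can be sketched in a few lines of plain Python. This is not Chainer's API: the `Value` class and `forward` function below are hypothetical and exist only to show that the graph is recorded while ordinary code executes, so normal control flow such as a loop decides the network's shape at run time.

```python
class Value:
    """A number that remembers how it was computed (toy define-by-run sketch)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        # Each entry is (parent Value, local derivative of self w.r.t. that parent).
        self.parents = parents

    def __add__(self, other):
        return Value(self.data + other.data, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Value(self.data * other.data,
                     ((self, other.data), (other, self.data)))

    def backward(self):
        # Collect nodes in topological order, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad


def forward(x, w, steps):
    # A plain Python loop: the graph depth depends on a run-time value.
    h = x
    for _ in range(steps):
        h = h * w
    return h


x, w = Value(2.0), Value(3.0)
out = forward(x, w, steps=2)   # computes x * w * w
out.backward()
print(out.data, x.grad, w.grad)
```

Here the output is 2 * 3 * 3 = 18, the gradient with respect to `x` is w² = 9, and the gradient with respect to `w` is 2 * x * w = 12. Calling `forward` with a different `steps` value would record a different graph without any separate definition step.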

Other

In addition to the deep learning frameworks described above, there are more open source solutions focused on specific tasks. For example, Nolearn focuses on deep belief networks; Sklearn-theano provides a programming syntax that matches scikit-learn (a major machine learning library in Python) and can be used with the Theano library; and Paddle offers better natural language processing capabilities.

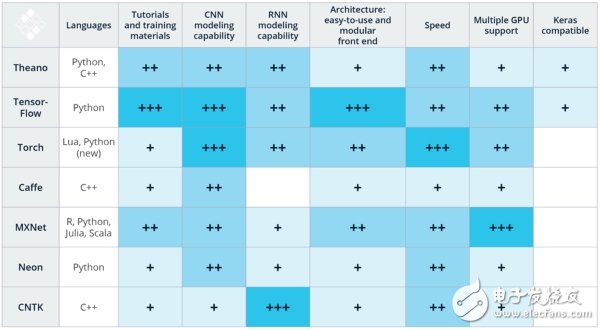

Faced with so many deep learning frameworks, how do users make the right choice? To answer this, Lexicon AI CEO and founder Matthew Rubashkin and his team compared the frameworks on programming language, tutorials and training samples, CNN modeling capability, RNN modeling capability, ease of use of the architecture, speed, multi-GPU support, and Keras compatibility, and summarized the results in a chart.

Matthew Rubashkin graduated from the University of California at Berkeley and is an Insight Data Engineering researcher and doctoral student at UCSF. He has worked at Silicon Valley Data Science (SVDS), where he led the deep learning R&D team on research projects including TensorFlow image recognition on IoT devices.

It is worth noting that these results combine the subjective experience of Matthew Rubashkin's team in image and speech recognition applications with public benchmarks of these technologies. They are only an interim assessment and do not cover every available deep learning framework: DeepLearning4j, Paddle, and Chainer, for example, are not yet included.

The following is the corresponding evaluation basis:

Computer Languages

The language a framework is written in affects its effectiveness. Although many frameworks provide bindings that let users access the framework from a language other than the one it was written in, the implementation language inevitably limits, to some extent, how flexibly you can develop in those other languages later.

Therefore, when applying deep learning models, it is best to use a framework written in a language you are familiar with. For example, Caffe (C++) and Torch (Lua) provide Python bindings for their code bases, but to get the most out of them you need to be proficient in C++ or Lua respectively. In contrast, TensorFlow and MXNet support multiple languages well, so users can work with them even without being proficient in C++.

Tutorials and training samples

The quality, coverage, and examples of a framework's documentation are critical to using it effectively. High-quality documentation and worked examples of the problem at hand help developers solve their problems efficiently. Complete documentation also indicates that the tool is mature and will not change in the short term.

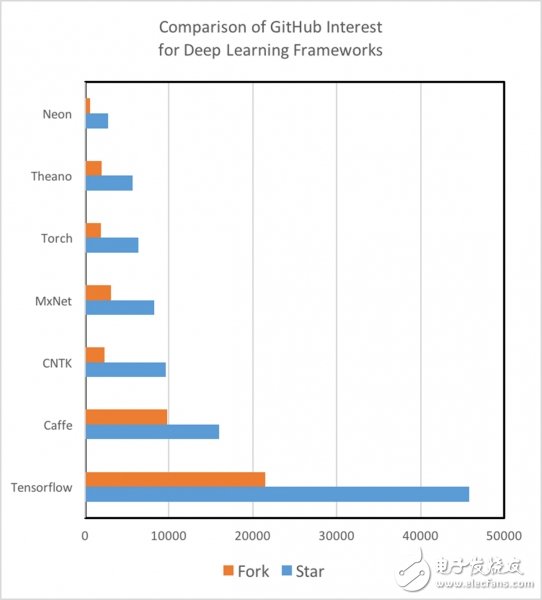

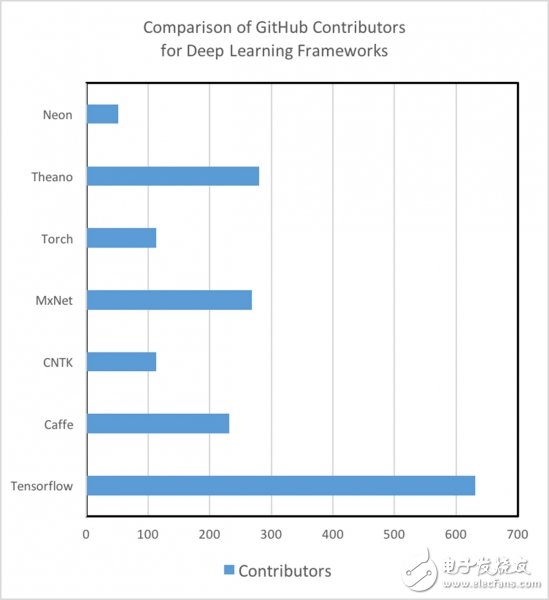

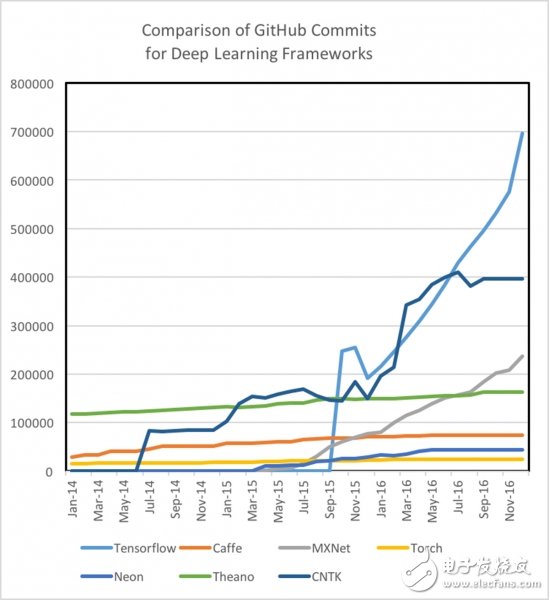

Deep learning frameworks differ greatly in the quality and quantity of their tutorials and training samples. For example, Theano, TensorFlow, Torch, and MXNet are easy to understand and implement because of their well-documented tutorials. We also found that the level of participation and activity in a framework's GitHub community is not only an important indicator of its future development, but also a gauge of how quickly an issue or bug can be resolved by searching Stack Overflow or the repository's Git issues. It's worth noting that TensorFlow is the 800-pound gorilla in terms of the number of tutorials and training samples and the size of its developer and user community.

CNN modeling capabilities

A convolutional neural network (CNN) consists of a set of distinct layers that transform the initial data volume into output scores for a set of predefined classes. It is a feedforward neural network whose artificial neurons respond to a local region of the input, their receptive field. CNNs perform very well on large images and can be used for image recognition, recommendation engines, and natural language processing. They can also be used for regression, for example in models that output a steering angle for autonomous vehicles.

CNN modeling capability covers several things: the space of models that can be defined, the availability of pre-built layers, and the tools and functions available for connecting those layers. Theano, Caffe, and MXNet all have good CNN modeling capabilities. That said, TensorFlow makes it easy to build on its InceptionV3 model, and Torch offers excellent CNN resources, including easy-to-use temporal convolution, which sets these two technologies apart in CNN modeling capability.
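To show what "responding to a local region of the input" means, here is a minimal sketch of a single convolution step in plain Python. It is an illustration only: `conv2d_valid` is a hypothetical helper, it handles one channel with no padding or stride, and it uses the cross-correlation form (no kernel flip) that deep learning libraries commonly call convolution. Real frameworks provide this as an optimized pre-built layer.

```python
def conv2d_valid(image, kernel):
    """Slide a small kernel over a 2D image (lists of lists), no padding.

    Each output value depends only on the patch of the image under the
    kernel, which is that output's receptive field.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
# A 2x2 kernel that subtracts the lower-right neighbour from each pixel.
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

Because every diagonal neighbour in this image is exactly 4 larger, each of the four outputs is -4. A full CNN layer would apply many such kernels, learn their weights, and follow them with an activation function and pooling.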

RNN modeling capabilities

Recurrent neural networks (RNNs), unlike CNNs, are suited to tasks that depend on sequential information, such as speech recognition, time series prediction, and image captioning. Because there are fewer pre-built RNN models than CNN models, if you have an RNN deep learning project it is important to consider which RNN models are already implemented and open sourced for a particular technology. For example, Caffe has very few RNN resources, while Microsoft's CNTK and Torch have rich RNN tutorials and pre-built models. TensorFlow itself has some RNN resources, but TFLearn and Keras include many more RNN examples that use TensorFlow.

Architecture

To create and train new models in a given framework, an easy-to-use, modular front end is essential. Our tests show that TensorFlow, Torch, and MXNet all have an intuitive modular architecture that makes development straightforward. In contrast, a framework like Caffe requires a lot of work to create a new layer. We also found TensorFlow especially easy to debug and monitor during and after training, because the TensorBoard web GUI application is included.

Speed

For open source convolutional neural networks (CNNs), Torch and Nervana have the best documented benchmark performance. TensorFlow is comparable in most tests, while Caffe and Theano lag behind. For recurrent neural networks (RNNs), Microsoft claims that CNTK has some of the shortest training times. However, another study that directly compared Theano, Torch, and TensorFlow on RNN modeling found that Theano performed best of the three.

Multi-GPU support

Most deep learning applications require an enormous number of floating-point operations (FLOPs). For example, training Baidu's DeepSpeech recognition model requires tens of exaFLOPs, that is, more than 10^18 floating-point operations. Even a leading graphics processing unit (GPU) such as NVIDIA's Pascal TitanX, which can perform 11 trillion floating-point operations per second, takes more than a week to train a new model on a sufficiently large data set. To reduce the time required to build a model, multiple GPUs across multiple machines are needed. Fortunately, most of the technologies listed above provide this support. For example, MXNet has a highly optimized multi-GPU engine.
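The arithmetic behind that estimate can be checked with a short calculation. The figures below are assumptions chosen for illustration: 10 exaFLOPs (10 × 10^18 operations) as the low end of the training cost, 11 trillion operations per second for a single GPU, and perfect scaling across GPUs, which real systems do not achieve. The function `training_days` is a hypothetical helper.

```python
# Assumed figures for illustration only.
TRAINING_FLOPS = 10e18          # 10 exaFLOPs of total work (low-end assumption)
GPU_FLOPS_PER_SECOND = 11e12    # 11 trillion floating-point operations per second
SECONDS_PER_DAY = 86400


def training_days(total_flops, flops_per_second, num_gpus=1, efficiency=1.0):
    """Estimate wall-clock training time in days.

    efficiency is the fraction of ideal multi-GPU scaling actually achieved
    (1.0 means perfect scaling, an optimistic assumption).
    """
    seconds = total_flops / (flops_per_second * num_gpus * efficiency)
    return seconds / SECONDS_PER_DAY


single_gpu_days = training_days(TRAINING_FLOPS, GPU_FLOPS_PER_SECOND)
eight_gpu_days = training_days(TRAINING_FLOPS, GPU_FLOPS_PER_SECOND, num_gpus=8)
print(round(single_gpu_days, 1), round(eight_gpu_days, 1))
```

Under these assumptions a single GPU needs roughly ten and a half days, and eight ideally scaled GPUs need a little over one day, which is why multi-GPU support matters when comparing frameworks.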

Keras compatibility

Keras is a high-level library for rapid deep learning prototyping and a tool that lets data scientists apply deep learning with ease. Keras currently supports two back ends, TensorFlow and Theano, and will also receive official support in TensorFlow.

Matthew Rubashkin suggests that when you start a deep learning project, you should first assess your team's skills and the project's needs. For example, for an image recognition application built by a Python-centric team, he recommends TensorFlow because of its rich documentation, good performance, and excellent prototyping tools. If the goal is to scale an RNN to production with a client team that is proficient in Lua, he recommends Torch for its superior speed and RNN modeling capabilities.

All in all, for most people, writing a deep learning algorithm from scratch is very costly, and using the vast resources available in deep learning frameworks is more efficient. Which framework is most appropriate depends on the user's skills and background, as well as the needs of the specific project. So when you start a deep learning project, it's worth spending some time evaluating the available frameworks to get the most value from the technology.
