Most readers are familiar with artificial intelligence, but to understand its history we must first go back to 1995.

At that time, a young Frenchman, Yann LeCun, had spent more than 10 years doing one thing: imitating some of the brain's functions to build artificially intelligent machines. Many computer scientists considered this a bad idea, but LeCun's research showed that the approach could produce intelligent and genuinely useful products.

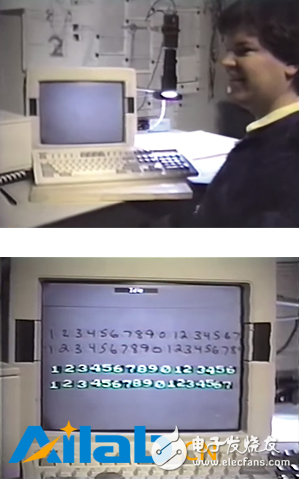

During his time at Bell Labs, he developed software that simulated neurons and learned to recognize handwritten text by studying many examples. AT&T, the parent company of Bell Labs, used this technology to build the first machines that could read the handwriting on checks. For believers in LeCun and in artificial neural networks, this seemed to be the beginning of a new era, proof that machines could learn skills that had previously been exclusively human.

For LeCun, however, the moment of success was also the moment the project came to an end. In order to pursue different markets, AT&T announced that it would split into three separate companies. The company planned to move LeCun to other research, so he left to teach at New York University. At the same time, researchers elsewhere found that they could not apply LeCun's breakthrough to other computational problems, and enthusiasm for brain-inspired approaches to AI began to fade.

Yet LeCun, now 55, never stopped pursuing artificial intelligence. After more than 20 years of rejection, LeCun and others finally achieved remarkable results in areas such as face and speech recognition. Deep learning, as the technique is now widely known, has become a new battleground for technology companies such as Google, which are eager to apply it to commercial services. Facebook hired LeCun in 2013 to lead FAIR, an artificial intelligence research group of more than 50 people. For Facebook, LeCun's group is its first investment in fundamental research, one that may let the company grow beyond its identity as a social network and is likely to change our understanding of what machines can do.

The media has repeatedly reported that companies such as Facebook have rushed into this field in recent years, mainly because deep learning is far more capable at image recognition than earlier AI techniques. In the past, researchers had to hand-write a great deal of code to give machines each capability, such as detecting lines and right angles in pictures. Deep learning software learns to interpret and use the data by itself, without such hand-written code. Systems built on this approach already achieve accuracy comparable to that of humans.

Now LeCun is working on something more powerful: he intends to give software the language skills and common sense needed to carry on a basic conversation. When searching, we could tell the machine directly what we want, just as we would tell a person, without having to think about which keywords to enter. Deep learning could give machines the ability to understand and communicate, to recognize and answer questions, and to give us advice. One application would be to understand our requests and book a restaurant on our behalf, and the technology is also likely to revolutionize the gaming industry.

According to LeCun, these systems must not only help people complete tasks but also understand why. Today's search engines, anti-spam systems, and virtual assistants do not do this. Most of them rely on techniques such as keyword matching, ignoring the order in which words appear. Take Siri as an example: it looks up content that matches your request in a few libraries of prepared responses, but it does not actually understand what you mean. IBM's Watson computer, which defeated human players on Jeopardy!, handled language through heavily hand-programmed rules that could not be applied to other situations.
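To see why ignoring word order loses meaning, consider a minimal sketch of keyword-style matching. The function name `bag_of_words` and the example sentences are illustrative assumptions, not taken from any of the products mentioned above.

```python
from collections import Counter


def bag_of_words(text):
    """Reduce a sentence to word counts, discarding word order."""
    return Counter(text.lower().split())


# Two sentences with the same words but opposite meanings.
first = bag_of_words("the dog bit the man")
second = bag_of_words("the man bit the dog")

# A system that only compares word counts cannot tell them apart.
print(first == second)
```

Any approach that compares only these counts treats the two sentences as identical, which is the limitation described above.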

By contrast, deep learning software may be able to learn to understand language the way humans do. Researchers are trying to teach machines what words mean. The system developed by LeCun and colleagues can answer questions and perform logical reasoning after reading some simple stories.

However, LeCun and his colleagues know that artificial intelligence has a history of loud thunder and little rain. People expect a huge breakthrough, and in the end it may turn out to be a small step. Getting a machine to handle complex language is much harder than image recognition. There is no doubt that deep learning has a lot to offer in this field, but whether machines can really master language and change our lives remains unknown.

Deep history

Traced to its roots, the history of deep learning goes back much further than LeCun's years at Bell Labs. He and others actually revived a long-abandoned idea.

Back in the 1950s, biologists trying to explain how intelligence and learning arise, and how signals pass between neurons in the brain, came up with some simple theories. The core idea was that the connection between neurons strengthens when the cells communicate frequently. As new experiences occur, this signaling adjusts the structure of the brain so that similar experiences are better understood the next time.

In 1956, psychologist Frank Rosenblatt used this theory to simulate neurons with a combination of software and hardware. He developed the perceptron, which could perform simple classification of pictures. Although the idea was implemented on a clumsy mainframe, it laid the foundation for today's artificial neural networks.

The computer he built was wired to a large number of motors and light detectors and had a total of eight simulated neurons. First, the detectors sensed the optical signal from a picture and passed it to the neurons. Each neuron processed the signals it received and returned a value. Taken together, these values described what the machine saw. The initial test results were terrible, but Rosenblatt used a learning procedure that eventually allowed the machine to distinguish different shapes correctly. When he showed a picture to the machine, he also told it the correct answer. The machine then worked out how the weight each neuron gave to its input signals would have to change to produce the correct answer, and adjusted the weights accordingly. After many examples, the machine could recognize images it had never seen before. Today's deep learning networks use more advanced algorithms and have millions of simulated neurons, but the training principle is the same.
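The training loop described here can be sketched in a few lines. This is a single simulated neuron using the classic perceptron update rule, not a reconstruction of Rosenblatt's hardware. The four-pixel "images", the labels, and the names `predict` and `train_perceptron` are assumptions made for illustration.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(examples, n_inputs, epochs=20, lr=1.0):
    """Show each example with its correct answer and nudge the weights on errors."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)
            if error != 0:
                # Shift each weight toward the answer the teacher supplied.
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
    return weights, bias


# Hypothetical 4-pixel images: label 1 if the lit pixel is on the left half.
training = [
    ([1, 0, 0, 0], 1),
    ([0, 1, 0, 0], 1),
    ([0, 0, 1, 0], 0),
    ([0, 0, 0, 1], 0),
]
weights, bias = train_perceptron(training, n_inputs=4)

# Images that were never shown during training.
print(predict(weights, bias, [1, 1, 0, 0]))
print(predict(weights, bias, [0, 0, 1, 1]))
```

The rule only converges when a single weighted sum can separate the classes, which is one reason a single layer is so limited.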

Rosenblatt predicted that his perceptron would have a wide range of applications, such as allowing a machine to greet people by name. If pictures and signals could be passed through multiple layers of a neural network, the perceptron could solve more complex problems. Unfortunately, his learning algorithm did not work with multiple layers. In 1969, Marvin Minsky, a pioneer in the field of AI, published a book that smothered interest in neural networks in the cradle. Minsky claimed that adding layers would not make the perceptron more useful. AI researchers abandoned the idea and turned to logic-based approaches to build artificial intelligence, while neural networks were pushed to the margins of computer science.

When LeCun was a student in Paris in 1980, he discovered this earlier work and was surprised that the idea had been abandoned. He searched the library for relevant papers and finally found a group in the United States studying neural networks. They were working on the old problem Rosenblatt had run into: how to train neural networks with multiple layers. The research had something of an underground character. To avoid rejection by reviewers, the researchers tried not to use words such as "neural" and "learning".

After reading their work, LeCun joined the group. There he met Geoff Hinton, who now works for Google. They agreed that artificial neural networks were the only way to build artificial intelligence. They went on to develop multi-layer neural networks successfully, but the networks' applicability was very limited. Bell Labs researchers developed another, more practical set of algorithms, which companies such as Google and Amazon quickly applied to spam filtering and product recommendations.

After LeCun left Bell Labs for New York University, he and other researchers formed a research group. To prove the value of neural networks, they quietly had more powerful machines learn from and process more data. LeCun's handwriting recognition system had five layers of neurons, and networks have since grown to more than 10. After 2010, neural networks beat existing techniques in areas such as image classification, and large companies such as Microsoft began to apply them to speech recognition. For most researchers, though, neural networks were still a very marginal technology. In 2012, LeCun even wrote an anonymous letter of protest after a paper reporting a new record set by neural networks was rejected by a top conference.

Six months later, one event changed everything.

Hinton and two of his students entered an image recognition competition and achieved impressive results. The network they used was similar to the check-reading network developed by LeCun. In the competition, software had to identify more than 1,000 kinds of objects, and their system reached an accuracy of 85%, 10 percentage points ahead of the second-place entry. The first layer of the deep learning software tunes its neurons to find simple features such as corners, while later layers look for progressively more complex shapes. LeCun still recalls the scene. When the winners presented their paper, it was like a slap in the face for the people in the room who had ignored their research, and all they could say was: "OK, we admit it. You won."

After this contest, the mood in computer vision changed quickly. People abandoned the old methods, and deep learning rapidly became the mainstream of artificial intelligence. Google bought a company founded by Hinton to develop Google Brain. Microsoft also began researching the technology. Facebook CEO Mark Zuckerberg even appeared at a neural network research conference to announce that LeCun would join FAIR while keeping his faculty position at New York University.

LeCun at Bell Labs in 1993, next to a computer that recognizes the handwritten numbers on checks.

Facebook's new office is only three minutes from where LeCun teaches, and there he works with researchers to make neural networks better at understanding language. The method works as follows: the neural network scans through documents, and whenever it encounters a word it predicts the words that come before and after it, then compares the prediction with the actual text. In this way, the software learns to represent each word as a vector that captures its relationships to other words.
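The training data for this kind of prediction can be sketched as pairs of a word and its neighbours. The snippet below only builds those pairs. It does not train a network, and the function name `context_pairs`, the window size, and the sentence are illustrative assumptions.

```python
def context_pairs(tokens, window=2):
    """Pair every word with the words up to `window` positions before and after it."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


sentence = "the king greeted the queen".split()
pairs = context_pairs(sentence, window=1)
for target, neighbour in pairs:
    print(target, "->", neighbour)
```

A model trained to guess the neighbour from the target has to place words that appear in similar contexts near each other, which is where the vectors come from.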

For example, in the neural network, the vector relationship between "king" and "queen" is the same as that between "husband" and "wife". The method also works for whole sentences. Some research results show that machines using vector techniques even outperform humans on comprehension tests involving synonyms and antonyms.
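The king and queen relationship can be checked by comparing difference vectors. The three-dimensional vectors below are hand-made toy values chosen so the pattern is visible. Real systems learn vectors with hundreds of dimensions from text, and the helper names are assumptions.

```python
import math

# Hypothetical toy vectors: (royalty, male, female).
vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "husband": [0.2, 0.8, 0.1],
    "wife": [0.2, 0.1, 0.8],
}


def subtract(a, b):
    """Element-wise difference between two vectors."""
    return [x - y for x, y in zip(a, b)]


def cosine(a, b):
    """Cosine similarity: 1 means same direction, 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


royal_gap = subtract(vectors["king"], vectors["queen"])
spouse_gap = subtract(vectors["husband"], vectors["wife"])
rank_gap = subtract(vectors["king"], vectors["husband"])

print(cosine(royal_gap, spouse_gap))  # close to 1: the same relationship
print(cosine(royal_gap, rank_gap))    # close to 0: a different relationship
```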

LeCun's team has gone further. They believe that language itself is not complicated. What is really complicated is the deep understanding and common sense that language relies on. Take the sentence "Xiao Ming took the bottle out of the room." The implication is that the bottle is now with Xiao Ming. With this in mind, the neural network they developed is equipped with a memory that stores the facts it has learned and is not cleared every time new data arrives.
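The value of a memory that persists across inputs can be illustrated with a deliberately simple sketch. This is not a neural network and not Facebook's system: hand-written string rules stand in for everything a memory network would learn, and the class name `FactMemory` and the sentence patterns are assumptions.

```python
class FactMemory:
    """Toy persistent memory: facts accumulate instead of being cleared per input."""

    def __init__(self):
        self.holder = {}    # object -> person carrying it
        self.location = {}  # person -> place they went to

    def read(self, sentence):
        """Store a fact from one of two hypothetical sentence patterns."""
        text = sentence.rstrip(".")
        if " took the " in text:
            person, obj = text.split(" took the ")
            self.holder[obj] = person
        elif " went to the " in text:
            person, place = text.split(" went to the ")
            self.location[person] = place

    def where_is(self, obj):
        """Chain two stored facts: who holds the object, then where that person is."""
        person = self.holder.get(obj)
        return self.location.get(person)


memory = FactMemory()
memory.read("Xiao Ming took the bottle.")
memory.read("Xiao Ming went to the kitchen.")
print(memory.where_is("bottle"))
```

Neither sentence states where the bottle is. The answer comes only from combining two remembered facts, which is the kind of implication described above.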

Facebook AI researchers have developed a system that answers simple questions, even ones containing words it has not encountered before. For example, the researchers gave the memory network a summary of The Lord of the Rings and asked it simple questions, such as where the ring is. Although it may never have encountered words from The Lord of the Rings before, it can still answer. If it could understand more complicated sentences, there would be many applications.

However, it has taken a great deal of effort just to build a system that can manage a limited dialogue, let alone one that can reason and plan, which neural networks still do poorly. Although no more efficient solution has been found yet, researchers such as LeCun remain full of confidence.

But not everyone is so optimistic. Oren Etzioni, CEO of a research institute in Seattle, believes that deep learning software has so far demonstrated only the simplest parts of language understanding and still lacks the ability to reason logically. That is very different from what neural networks do today, such as classifying images and analyzing sound waves. In addition, mastering language is not that simple, because the meaning of a sentence can change with its context. For software to become proficient in language, it needs to grasp the meaning of a sentence without explicit instruction, as a baby does.

Deep faith

Facebook's CTO, Mike Schroepfer, hopes that Facebook's system will one day communicate with you like a human butler. The system would understand language and concepts at a higher level: for example, you could ask for a photo of a friend rather than his status updates. As LeCun's system gains stronger reasoning and planning capabilities, this may be possible in the short term. In addition, Facebook could offer things it thinks you will be interested in and ask for your opinion, eventually letting this super butler immerse itself in the ocean of information on your behalf.

Improvements in these conversational algorithms could also improve Facebook's ability to filter information and advertising, which is critical to Facebook's ambition to go beyond social networking. As Facebook begins to publish information as a media outlet, people need better ways to manage information. A virtual assistant could help Facebook achieve this ambition.

If deep learning repeats the mistakes of earlier artificial intelligence, none of this may ever happen. LeCun, however, is full of confidence. He believes there is enough evidence on his side to show that deep learning will eventually bring huge returns. Getting machines to process language requires new ideas, but as more and more companies and universities join the field, what was once a small corner of research now has unlimited possibilities, which will greatly speed up the whole process.

It is still unclear whether deep learning can deliver the butler that Facebook hopes for. Even if it does, it is hard to imagine how much people will benefit. But maybe we will not have to wait too long. LeCun firmly believes that those who doubt that deep learning can master language will regret it. The situation is the same as before 2012: things have changed, but the people who use the old methods are still stubborn. Maybe in a few years, people will no longer see it that way.
