This article compares and analyzes:

preempt_disable()

local_irq_disable()/local_irq_save(flags)

spin_lock()

spin_lock_irq()/spin_lock_irqsave(lock, flags)

Which of them disable preemption? And, to be clear about it, what does disabled preemption in turn shut off?

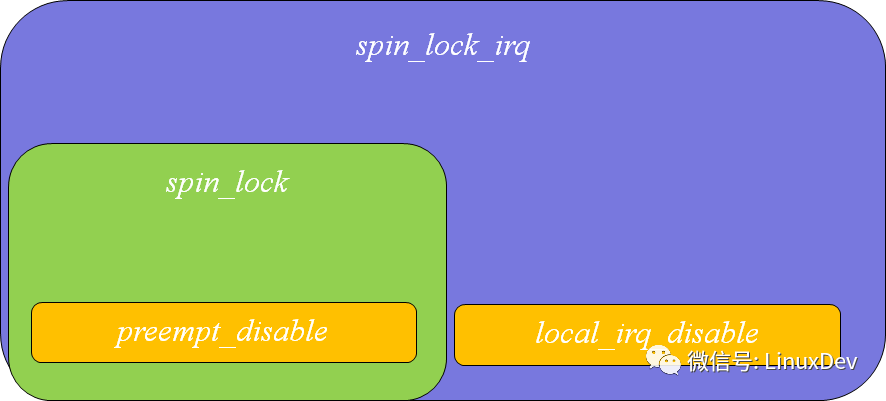

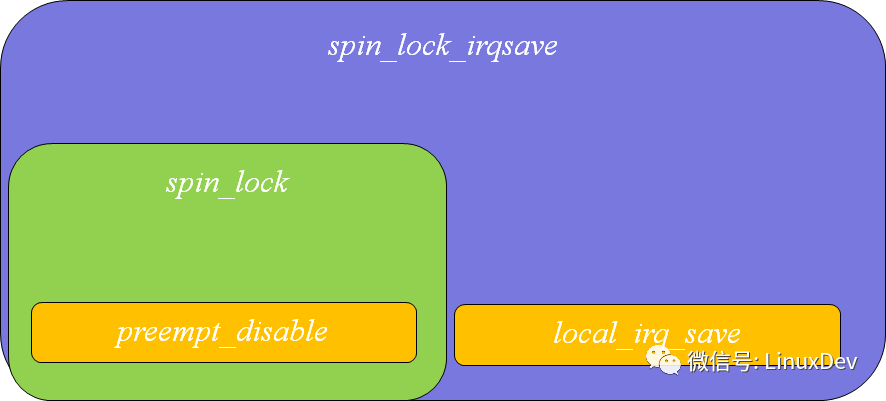

First, let's outline how these APIs relate to one another.

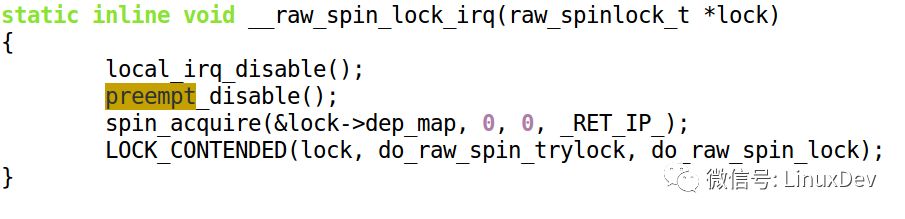

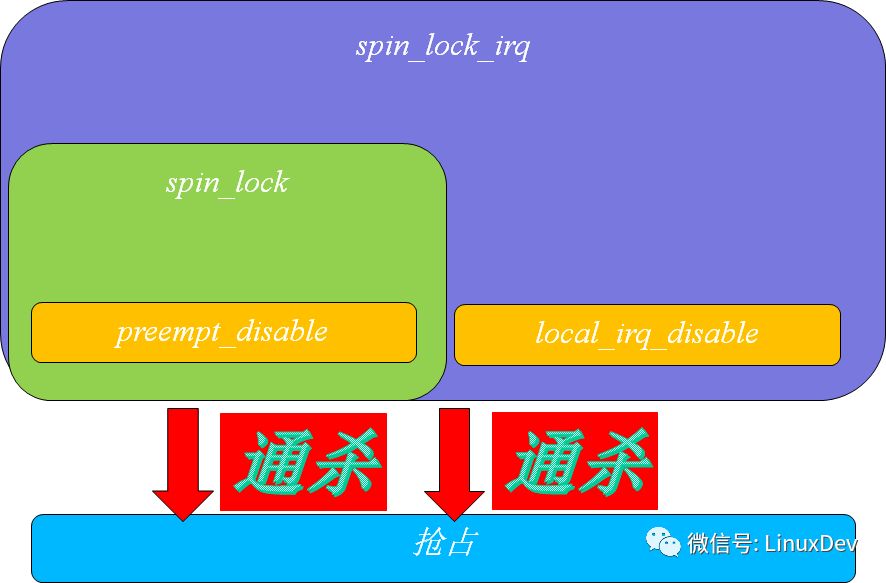

We know that spin_lock() calls preempt_disable() to disable preemptive scheduling on the local CPU core (preempt_disable() achieves this by incrementing preempt_count). We also know that spin_lock_irq() is essentially local_irq_disable() plus preempt_disable(), followed by acquiring the lock.
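To make this composition concrete, here is a minimal user-space model in C. It is a sketch under stated assumptions, not kernel code: one CPU core is represented by two globals (a preempt_count counter and an IRQ-mask flag), and every `sim_`-prefixed name is hypothetical. It only mirrors the relationships described above: spin_lock() is preempt_disable() plus taking the lock, and spin_lock_irq() masks interrupts first.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical user-space model of ONE CPU core; not kernel code. */
static int  sim_preempt_count;   /* models preempt_count              */
static bool sim_irqs_off;        /* models the CPU's IRQ mask bit     */

static void sim_preempt_disable(void) { sim_preempt_count++; }

static void sim_preempt_enable(void)
{
    assert(sim_preempt_count > 0);   /* catch an unbalanced enable */
    sim_preempt_count--;
}

static void sim_local_irq_disable(void) { sim_irqs_off = true; }
static void sim_local_irq_enable(void)  { sim_irqs_off = false; }

typedef struct { bool locked; } sim_spinlock_t;

/* spin_lock(): preempt_disable() + take the lock */
static void sim_spin_lock(sim_spinlock_t *l)
{
    sim_preempt_disable();
    assert(!l->locked);   /* single-CPU model: no real spinning */
    l->locked = true;
}

static void sim_spin_unlock(sim_spinlock_t *l)
{
    l->locked = false;
    sim_preempt_enable();
}

/* spin_lock_irq(): local_irq_disable() + spin_lock() */
static void sim_spin_lock_irq(sim_spinlock_t *l)
{
    sim_local_irq_disable();
    sim_spin_lock(l);
}

/* spin_unlock_irq(): release, unmask IRQs, then re-enable preemption */
static void sim_spin_unlock_irq(sim_spinlock_t *l)
{
    l->locked = false;
    sim_local_irq_enable();
    sim_preempt_enable();
}
```

For example, after `sim_spin_lock_irq(&l)` the model has `sim_preempt_count == 1` and `sim_irqs_off == true`; `sim_spin_unlock_irq(&l)` undoes both, unmasking IRQs before re-enabling preemption.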

The containment relationships among these functions are as follows:

The only difference between local_irq_disable() and local_irq_save(flags) is whether the CPU's current interrupt-mask state is saved (into flags) before interrupts are disabled.

Likewise, the only difference between spin_lock_irq() and spin_lock_irqsave(lock, flags) is whether the CPU's interrupt-mask state is saved.
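Why would you want the save variant? Here is a minimal user-space sketch, assuming a single boolean stands in for the CPU's IRQ-mask bit; all `sim_` names are hypothetical. Unlike the real local_irq_save(flags), which is a macro that writes into flags, this model returns the saved state. A helper built on disable/enable unconditionally re-enables interrupts on exit, even if its caller had them disabled, whereas the save/restore pair hands back the caller's state.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model: one IRQ-mask bit (e.g. the CPSR I bit on ARM). */
static bool sim_irqs_off;

static void sim_local_irq_disable(void) { sim_irqs_off = true; }
static void sim_local_irq_enable(void)  { sim_irqs_off = false; }

/* Models local_irq_save(flags): remember the old state, then disable. */
static unsigned long sim_local_irq_save(void)
{
    unsigned long flags = sim_irqs_off ? 1UL : 0UL;
    sim_irqs_off = true;
    return flags;
}

/* Models local_irq_restore(flags): put back whatever state was saved. */
static void sim_local_irq_restore(unsigned long flags)
{
    sim_irqs_off = (flags != 0);
}

/* A helper that may be called with IRQs either on or off. */
static void sim_helper_disable_enable(void)
{
    sim_local_irq_disable();
    /* ... critical section ... */
    sim_local_irq_enable();        /* re-enables even if caller had IRQs off */
}

static void sim_helper_save_restore(void)
{
    unsigned long flags = sim_local_irq_save();
    /* ... critical section ... */
    sim_local_irq_restore(flags);  /* returns to the caller's state */
}
```

If the caller has already masked interrupts, `sim_helper_save_restore()` leaves them masked, while `sim_helper_disable_enable()` silently unmasks them behind the caller's back.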

Who killed preemption?

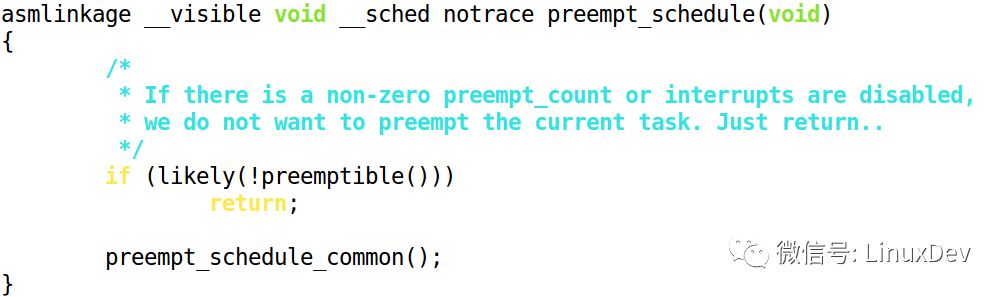

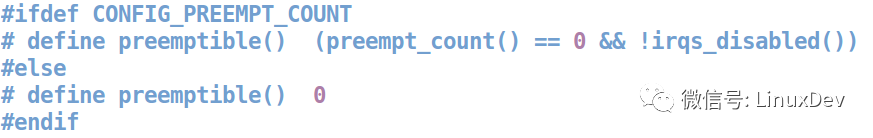

The kernel code clearly shows that, before performing a preemptive schedule, it checks whether preempt_count is non-zero or interrupts are disabled:

We can further expand preemptible():

On ARM processors, irqs_disabled() actually checks whether the I bit (IRQMASK_I_BIT) in the CPSR is set.
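The check can be modeled in a few lines. This is a hedged user-space sketch, assuming bit 7 of an ARM-style CPSR is the I bit (PSR_I_BIT is 0x80 on 32-bit ARM) and that preemptible() reduces to `preempt_count() == 0 && !irqs_disabled()` as described above. The `sim_` names are hypothetical, and the real preempt_schedule() does more work before calling into the scheduler.

```c
#include <assert.h>
#include <stdbool.h>

#define SIM_IRQMASK_I_BIT (1u << 7)   /* I bit: bit 7 of the ARM CPSR */

static unsigned int sim_preempt_count;   /* models preempt_count     */
static unsigned int sim_cpsr;            /* models the CPSR register */
static int sim_schedule_calls;           /* counts actual reschedules */

/* Models irqs_disabled() on ARM: test the I bit in the CPSR. */
static bool sim_irqs_disabled(void)
{
    return (sim_cpsr & SIM_IRQMASK_I_BIT) != 0;
}

/* Models preemptible(): both conditions must allow preemption. */
static bool sim_preemptible(void)
{
    return sim_preempt_count == 0 && !sim_irqs_disabled();
}

/* Models the early exit in preempt_schedule(). */
static void sim_preempt_schedule(void)
{
    if (!sim_preemptible())
        return;               /* either "killer" blocks preemption */
    sim_schedule_calls++;     /* stands in for calling the scheduler */
}
```

Either a non-zero count or a set I bit is enough to make `sim_preempt_schedule()` return without scheduling.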

We can therefore conclude that every function listed at the beginning of this article disables preemptive scheduling on the local core: either a non-zero preempt_count or disabled interrupts is enough to make the kernel decide that it cannot preempt!

The logic by which preemption gets killed can be summarized as follows:

How do the killers differ?

If they all disable preemption, where do they differ?

Let's look at two code snippets, assuming both run in an ordinary (non-RT) process with a nice value of 0:

```c
preempt_disable();
xxx(1);
preempt_enable();
```

versus

```c
local_irq_disable();
xxx(2);
local_irq_enable();
```

First, neither xxx(1) nor xxx(2) can be preempted: the first is protected by a non-zero preempt_count, the second by disabled interrupts.

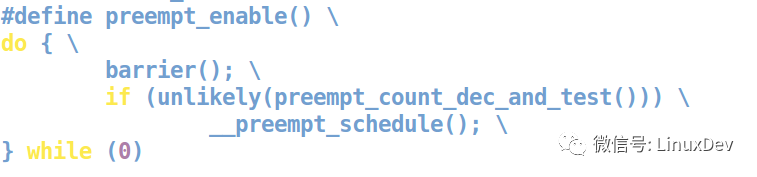

However, suppose xxx(1) wakes up a high-priority RT task. Then the moment of preempt_enable() is itself a preemption point: the scheduler runs right away and the high-priority RT task gets the CPU. Now suppose xxx(2) wakes up a high-priority RT task. The moment of local_irq_enable() is not a preemption point, so the RT task must wait for the next one, which may be the return from timer tick handling, the return from an interrupt, the end of softirq processing, a call to yield(), and so on.
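The two scenarios can be simulated with a small user-space model. This is a sketch under stated assumptions: a need_resched flag is set when the RT task is woken, and a second flag records whether the RT task got the CPU. All `sim_` names are hypothetical. `sim_preempt_enable()` checks for a pending reschedule when the count drops to zero, while `sim_local_irq_enable()` only clears the mask bit.

```c
#include <assert.h>
#include <stdbool.h>

static int  sim_preempt_count;
static bool sim_irqs_off;
static bool sim_need_resched;   /* set when a higher-priority task is woken */
static bool sim_rt_ran;         /* true once the RT task got the CPU        */

static bool sim_preemptible(void)
{
    return sim_preempt_count == 0 && !sim_irqs_off;
}

static void sim_preempt_schedule(void)
{
    if (!sim_preemptible() || !sim_need_resched)
        return;
    sim_need_resched = false;
    sim_rt_ran = true;          /* the woken RT task runs here */
}

/* preempt_enable() IS a preemption point. */
static void sim_preempt_disable(void) { sim_preempt_count++; }
static void sim_preempt_enable(void)
{
    assert(sim_preempt_count > 0);
    if (--sim_preempt_count == 0)
        sim_preempt_schedule();
}

/* local_irq_enable() only unmasks IRQs; it is NOT a preemption point. */
static void sim_local_irq_disable(void) { sim_irqs_off = true; }
static void sim_local_irq_enable(void)  { sim_irqs_off = false; }

/* Hypothetical stand-in for xxx(): wakes a high-priority RT task. */
static void sim_wake_rt_task(void) { sim_need_resched = true; }
```

In the second scenario the reschedule request is still pending after `sim_local_irq_enable()`; in the real kernel it would be honored at the next preemption point, such as an interrupt return.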

When preempt_count drops back to zero and a reschedule is pending, preempt_enable() calls preempt_schedule():

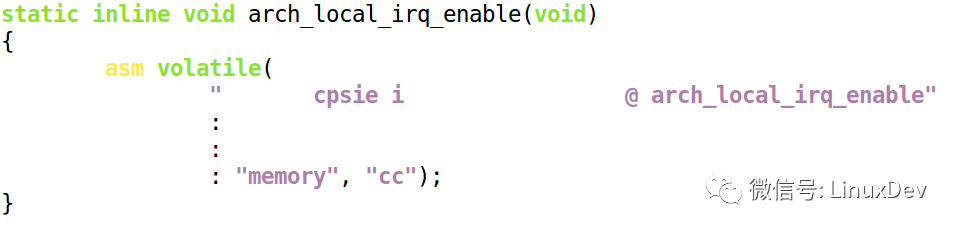

local_irq_enable(), by contrast, simply re-enables the CPU's response to interrupts. On ARM it is:

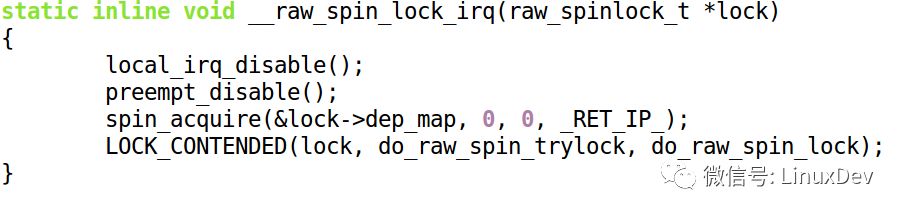

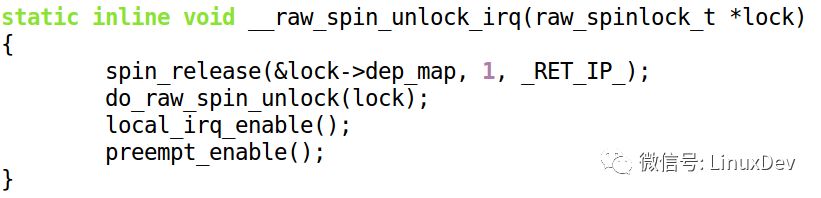

Now look at the big boss: spin_lock_irq() calls both local_irq_disable() and preempt_disable():

The corresponding spin_unlock_irq() calls local_irq_enable() and then preempt_enable():

Think about it: why does preempt_enable() come after local_irq_enable()? Suppose the order were reversed:

```c
preempt_enable();
local_irq_enable();
```

The first call, preempt_enable(), would attempt a preemptive schedule. But even though preempt_schedule() gets called, it returns immediately because IRQs are still disabled (see the earlier discussion of who killed preemption), so no preemption happens. The next call, local_irq_enable(), performs no preemptive scheduling at all. So, with that order, consider:

```c
spin_lock_irq(&lock);
xxx(3);
spin_unlock_irq(&lock);
```

If xxx(3) wakes up a high-priority RT task, that task would not be able to preempt immediately at spin_unlock_irq()!

Fortunately, the real order is:

```c
local_irq_enable();
preempt_enable();
```

So, at the moment of spin_unlock_irq(), the RT task is switched in and runs.
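The difference between the two orders can be checked with a hedged user-space sketch, assuming preempt_schedule() bails out whenever preempt_count is non-zero or IRQs are masked, as described earlier. The `sim_` names, including the deliberately wrong `sim_spin_unlock_irq_wrong()`, are hypothetical; the lock itself is omitted because only the ordering matters here.

```c
#include <assert.h>
#include <stdbool.h>

static int  sim_preempt_count;
static bool sim_irqs_off;
static bool sim_need_resched;   /* set when xxx(3) wakes an RT task */
static bool sim_rt_ran;         /* true once the RT task got the CPU */

static void sim_preempt_schedule(void)
{
    /* Returns immediately unless preemptible(): count == 0 and IRQs on. */
    if (sim_preempt_count != 0 || sim_irqs_off || !sim_need_resched)
        return;
    sim_need_resched = false;
    sim_rt_ran = true;
}

static void sim_preempt_enable(void)
{
    assert(sim_preempt_count > 0);
    if (--sim_preempt_count == 0)
        sim_preempt_schedule();
}

static void sim_spin_lock_irq(void)
{
    sim_irqs_off = true;     /* local_irq_disable() */
    sim_preempt_count++;     /* preempt_disable()   */
}

/* Hypothetical WRONG order: preempt_enable() before local_irq_enable(). */
static void sim_spin_unlock_irq_wrong(void)
{
    sim_preempt_enable();    /* IRQs still off: preempt_schedule() bails out */
    sim_irqs_off = false;    /* local_irq_enable(): not a preemption point   */
}

/* The order the kernel actually uses. */
static void sim_spin_unlock_irq(void)
{
    sim_irqs_off = false;    /* local_irq_enable()                        */
    sim_preempt_enable();    /* now preemptible: the RT task runs at once */
}
```

With the wrong order the pending reschedule survives the unlock; with the real order it is consumed inside the unlock itself.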

Now look at the smaller boss, spin_lock():

```c
spin_lock(&lock);
xxx(4);
spin_unlock(&lock);
```

If xxx(4) wakes up an RT task, that task preempts immediately at spin_unlock(), because spin_unlock() calls preempt_enable().
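One consequence worth spelling out: because preempt_count is a counter rather than a flag, only the spin_unlock() that brings it back to zero is a preemption point. Below is a hedged user-space sketch with two nested locks; the `sim_` names are hypothetical, and IRQs are assumed to stay enabled throughout, so they are not modeled.

```c
#include <assert.h>
#include <stdbool.h>

static int  sim_preempt_count;
static bool sim_need_resched;   /* set when an RT task is woken */
static bool sim_rt_ran;         /* true once the RT task got the CPU */

typedef struct { bool locked; } sim_spinlock_t;

static void sim_preempt_enable(void)
{
    assert(sim_preempt_count > 0);
    if (--sim_preempt_count == 0 && sim_need_resched) {
        sim_need_resched = false;
        sim_rt_ran = true;      /* preemption point reached: RT task runs */
    }
}

static void sim_spin_lock(sim_spinlock_t *l)
{
    sim_preempt_count++;        /* preempt_disable() */
    assert(!l->locked);         /* single-CPU model: no real spinning */
    l->locked = true;
}

static void sim_spin_unlock(sim_spinlock_t *l)
{
    l->locked = false;
    sim_preempt_enable();       /* preemption point only at count == 0 */
}
```

Releasing the inner lock of a nested pair leaves the count at 1, so the woken RT task has to wait until the outer lock is released.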

And what does disabling preemption kill?

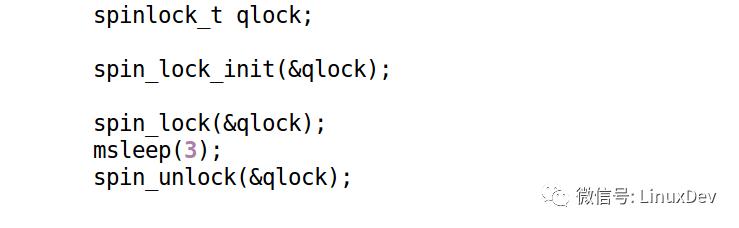

In theory, disabling preemption does not shut the scheduler off completely, because the process can still voluntarily go to sleep:

The code above calls msleep() while holding a spin_lock. In this case the process is not preempted; it gives up the CPU voluntarily, and Linux picks the next task to run.

However, such code carries serious hidden risks and tends to cause mysterious crashes later on, so it is strictly prohibited in real projects.

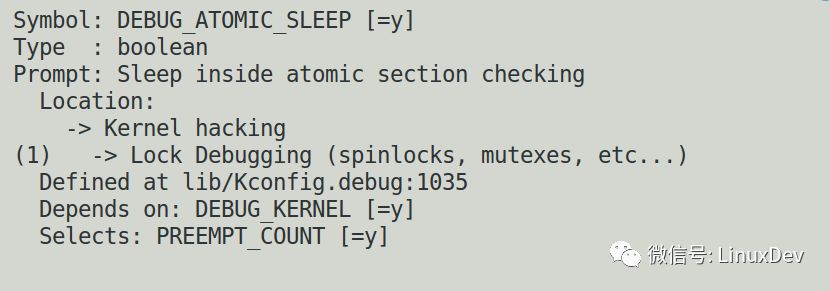

It is recommended that you enable CONFIG_DEBUG_ATOMIC_SLEEP in the kernel configuration, so that the kernel reports an error whenever this happens.

With this option enabled, the kernel detects that someone is sleeping in atomic context and prints the offending call stack.
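As a minimal `.config` fragment, assuming a kernel tree where DEBUG_ATOMIC_SLEEP is defined in lib/Kconfig.debug and depends on DEBUG_KERNEL, as in mainline (check the Kconfig of your own tree, since option locations and dependencies can change between versions):

```text
CONFIG_DEBUG_KERNEL=y
CONFIG_DEBUG_ATOMIC_SLEEP=y
```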
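A simplified user-space model of that check is sketched below. It assumes the sleeping function is annotated with a might_sleep()-style hook, as many sleeping kernel functions are. The message text matches the one the kernel prints, but the real check also handles cases this sketch ignores (for example rate limiting and the early-boot system state), and all `sim_` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

static int  sim_preempt_count;          /* non-zero inside spin_lock() etc. */
static bool sim_irqs_off;               /* true inside local_irq_disable()  */
static int  sim_atomic_sleep_reports;   /* how many times we complained     */

/* Models the check CONFIG_DEBUG_ATOMIC_SLEEP adds to might_sleep(). */
static void sim_might_sleep(const char *file, int line)
{
    if (sim_preempt_count == 0 && !sim_irqs_off)
        return;                         /* sleeping is legal here */
    sim_atomic_sleep_reports++;
    fprintf(stderr,
            "BUG: sleeping function called from invalid context at %s:%d\n",
            file, line);
    /* The real kernel would also dump the current call stack here. */
}

/* Hypothetical msleep(): run the check, then (in reality) block. */
static void sim_msleep(unsigned int ms)
{
    sim_might_sleep(__FILE__, __LINE__);
    (void)ms;                           /* actual sleeping omitted */
}
```

Calling `sim_msleep()` with `sim_preempt_count == 1` (as if inside spin_lock()) produces one report, while the same call outside any atomic section stays silent.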
