From birds to airplanes, from bats to radar: every time humans have paid tribute to nature, it has carried us into a new era. Artificial intelligence is precisely a tribute to nature's own monarch: humans.

Ever since the computer was invented, people have never stopped dreaming of giving machines intelligence. On this road there is a master widely respected by the global academic community: Yan Shuicheng, chief scientist of 360 and an internationally renowned expert in computer vision and deep learning.

He has not only repeatedly set research directions in computer vision internationally, but has also carried out a great deal of forward-looking work. One example is having computers imitate the way a baby's brain works, so that they observe and learn about the world step by step.

At the 2016 CCF-GAIR Global Artificial Intelligence and Robotics Summit, Yan Shuicheng gave an exclusive interview to Lei Feng Network and shared his insights on artificial intelligence and computer vision.

Yan Shuicheng, Chief Scientist of 360 and Dean of the 360 Institute of Artificial Intelligence

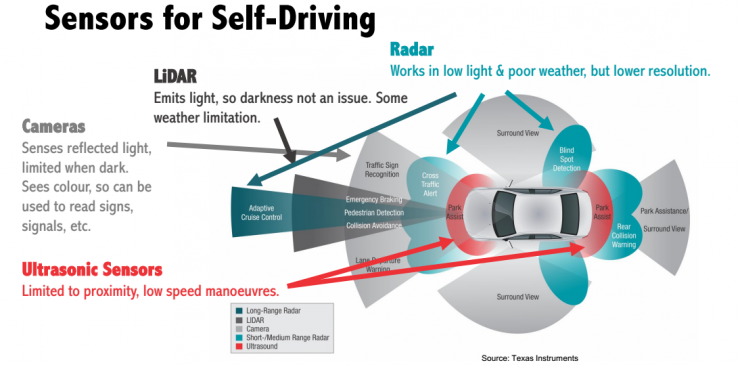

1. Intelligence on the device side

Much of today's artificial intelligence relies on cloud computing, but some scenarios are not suited to the cloud. For example:

Consider Tesla's autonomous driving. The car captures images of the road ahead, and sending them to the cloud for processing would introduce an obvious delay. By the time the results came back to the car, the moment to act would often have passed, and the results would be useless.

Live-streaming services add real-time effects to the streamer, such as a virtual earring. If the results had to be pulled back from a server, there would be a delay. Because the streamer is moving, the effect could not be aligned accurately and the experience would be poor.

Some smart cameras offer a feature that checks whether suspicious people have entered the monitored area. Such a device might sell at scale, with many users relying on this feature at the same time. If the server performed all of that computation, it would be under enormous pressure.

In many applications, smart devices have no network connection and cannot communicate with a server at all.

Schematic of Tesla's autonomous driving

In these scenarios, moving the computation directly onto the smart device itself would remove these bottlenecks.

However, putting the computational load on the device raises many problems of its own. One of the biggest is the ceiling on the device's computing power: because the cost of smart devices is constrained, most of them cannot use top-end computing chips.

To solve this problem, there are two directions:

1. Accept lower accuracy. Take recognizing faces and estimating age on a mobile phone: the accuracy will inevitably fall short of a professional recognition system. But a drop in accuracy from 95% to 85% is something people can accept.

2. Improve the computational model. Ideally, one would develop new and better models. But even within existing models, algorithms can be simplified through better strategies. For example, Yan Shuicheng's team studied adjustments to an algorithm. On top of the original algorithm, they added strategies that settle certain cases with simple logical checks instead of the full computation. The rules look more complicated, but the overall amount of computation goes down.
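The article does not describe the team's actual rules. One common way to "settle some cases without calculation" is a cascade: a cheap score decides the clear-cut inputs, and only ambiguous ones reach the expensive model. The sketch below assumes that reading. `cascade_classify`, the thresholds `low` and `high`, and the toy models are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def cascade_classify(
    x: Sequence[float],
    cheap_score: Callable[[Sequence[float]], float],
    expensive_model: Callable[[Sequence[float]], bool],
    low: float = 0.2,   # hypothetical threshold: below this, answer "no" cheaply
    high: float = 0.8,  # hypothetical threshold: above this, answer "yes" cheaply
) -> Tuple[bool, bool]:
    """Return (decision, used_expensive_model).

    A cheap rule settles clear-cut inputs; only ambiguous inputs
    fall through to the expensive model.
    """
    score = cheap_score(x)
    if score <= low:
        return False, False  # confidently negative: heavy model skipped
    if score >= high:
        return True, False   # confidently positive: heavy model skipped
    return expensive_model(x), True


# Usage: count how often the (hypothetical) heavy model actually runs.
heavy_calls: List[Sequence[float]] = []


def heavy_model(x: Sequence[float]) -> bool:
    heavy_calls.append(x)  # record each invocation of the costly path
    return sum(x) / len(x) > 0.5


def mean(x: Sequence[float]) -> float:
    return sum(x) / len(x)


inputs = [[0.1, 0.1], [0.9, 0.95], [0.5, 0.6], [0.05, 0.0]]
results = [cascade_classify(x, mean, heavy_model) for x in inputs]
print(results)           # [(False, False), (True, False), (True, True), (False, False)]
print(len(heavy_calls))  # 1
```

The extra branching makes the rules look more involved. In this toy run, though, three of the four inputs never touch the heavy model, which mirrors the trade-off described above.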

Yan Shuicheng believes that optimizing these strategies is very meaningful, because cost is often a decisive factor in commercialization.

2. Dedicated AI computing chips

At present, most mainstream artificial intelligence chips are GPUs. However, GPUs are bulky and power-hungry, so they cannot be used in mobile phones and similar devices. Phones and other hardware generally make do with somewhat more capable CPUs.

At present, there is no deep learning chip on the market designed specifically for end devices. Chinese researchers are also trying to build dedicated chips in this area.

Two examples are the Institute of Computing Technology of the Chinese Academy of Sciences, with its "Cambricon" chip, and Horizon Robotics, founded by Yu Kai, former head of Baidu's Institute of Deep Learning. Both are trying to create such specialized chips.

Cambricon chip

Chip manufacturing is a very heavy industry. It takes a year to a year and a half for a chip to go from tape-out to mass production, at a cost of millions of dollars. If the chip cannot be sold at scale, the costs become uncontrollable.

Until mature chips are available, it makes sense to use various methods to reduce the amount of computation required of device-side chips.

3. Brain-inspired computing

When you see a photo of a person, many stories about them come to mind automatically. This is the wonder of the human brain.

Brain-inspired research has long been a frontier direction in artificial intelligence. Simply put, the goal is to understand precisely how the human brain works and to use the same principles to design deep learning networks.

However, Yan Shuicheng said that progress in brain research has fallen short of expectations. Even so, he greatly values computational models inspired by the human brain.

For example, when a person looks at an object, the object continuously stimulates the retina. Like flowing water, this signal spreads through the various parts of the nervous system until it settles into a new equilibrium.

It is like a network of water pipes. When pressure is applied at the inlet, it propagates layer by layer, and the pressure at every node of the network changes together.

"For the brain, an image that energizes the entire system causes all the memories and knowledge associated with it to surface instantly."

This would be far more efficient than the step-by-step (linear) computation used by today's artificial intelligence.

Although this model sounds wonderful, actually solving its equations still poses many problems. Still, Yan Shuicheng said that new solutions may emerge in this direction in the future.
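The article does not specify the equations behind this model. To make the water-pipe analogy concrete, here is a minimal sketch that assumes the equilibrium is a discrete Laplace equation on a graph: every free node settles at the mean pressure of its neighbours. It is solved by Jacobi relaxation, in which all nodes update simultaneously from the previous state. The function name, graph, and pressures are illustrative assumptions, not Yan Shuicheng's model.

```python
from typing import Dict, List, Tuple


def relax_to_equilibrium(
    adj: List[List[int]],
    fixed: Dict[int, float],
    tol: float = 1e-9,
    max_iters: int = 10_000,
) -> Tuple[List[float], int]:
    """Synchronous (Jacobi) relaxation on a graph.

    Nodes in `fixed` are held at a set pressure (e.g. the inlet and an outlet).
    Every other node repeatedly takes the mean pressure of its neighbours; all
    nodes update at once from the previous state, until nothing changes by
    more than `tol`. Returns (pressures, iterations used).
    """
    n = len(adj)
    p = [fixed.get(i, 0.0) for i in range(n)]
    for it in range(1, max_iters + 1):
        new_p = []
        for i in range(n):
            if i in fixed or not adj[i]:
                new_p.append(p[i])  # boundary or isolated node: unchanged
            else:
                new_p.append(sum(p[j] for j in adj[i]) / len(adj[i]))
        delta = max(abs(a - b) for a, b in zip(new_p, p))
        p = new_p
        if delta < tol:
            return p, it
    return p, max_iters


# Usage: inlet (node 0) at pressure 1.0, outlet (node 3) at 0.0, chain 0-1-2-3.
pressures, iters = relax_to_equilibrium([[1], [0, 2], [1, 3], [2]], {0: 1.0, 3: 0.0})
print([round(v, 4) for v in pressures])  # [1.0, 0.6667, 0.3333, 0.0]
```

Even on this four-node toy, reaching equilibrium takes many sweeps. Plain Jacobi iteration is known to converge slowly on large graphs, which gives one hint of why solving such models in practice is hard.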

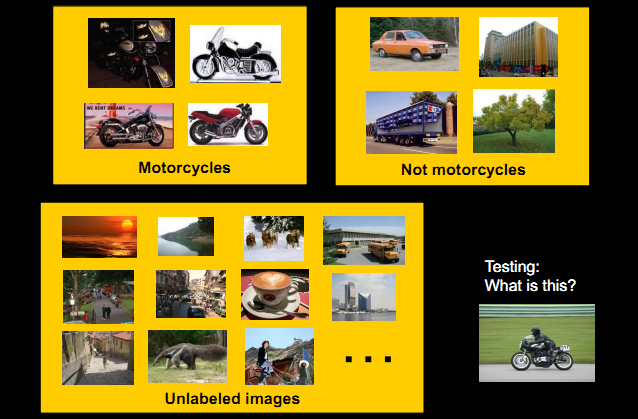

4. Unsupervised learning and self-learning

Today's deep learning, without exception, requires large amounts of data. For example, to have a machine accurately recognize dogs, you need to let the system "see" thousands of dogs first. This is clearly different from how humans learn.

A small child may need to see only one or two dogs to be able to recognize all the dogs in the world.

Unsupervised learning

From this perspective, learning from small samples, learning without supervision, and learning on one's own are where humans still beat machines. Can machines achieve small-sample, unsupervised learning and self-learning?

The answer may lie in going back to the moment each of us first encountered the world.

Yan Shuicheng said:

According to some studies, a child's earliest visual learning starts with moving objects. Babies, like frogs, can only pick out objects that move. Motion alone lets them divide the world in front of their eyes into separate pieces. From this starting point comes the concept of an object, followed by small-sample learning and self-learning.

Yan Shuicheng is very interested in this theory, which in fact points to a new entry point for machine vision: video.

Using video as the starting point to find the key to how both humans and machines learn is one of the ongoing projects of Yan Shuicheng's team.
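Why video helps: the pixels that change between consecutive frames separate a moving thing from a static background, with no labels involved. Below is a minimal frame-differencing sketch under that assumption. The threshold, the toy frames, and the function names are illustrative, and the article does not attribute this particular method to Yan Shuicheng's team.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image: rows of pixel intensities 0-255


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than `threshold` between frames."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, bottom, left, right) of the changed region, or None if nothing moved."""
    hits = [(r, c) for r, row in enumerate(mask) for c, moved in enumerate(row) if moved]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), max(rows), min(cols), max(cols)


def make_frame(left: int, height: int = 6, width: int = 8) -> Frame:
    """Toy scene: flat background (100) with a bright 2x2 square (200) at rows 2-3."""
    frame = [[100] * width for _ in range(height)]
    for r in (2, 3):
        for c in (left, left + 1):
            frame[r][c] = 200
    return frame


# The square slides two columns to the right; the static background is ignored.
print(bounding_box(motion_mask(make_frame(2), make_frame(4))))  # (2, 3, 2, 5)
print(bounding_box(motion_mask(make_frame(2), make_frame(2))))  # None
```

Simple differencing marks both where the object was and where it is now, so the box spans columns 2 to 5. Even so, the moving "block" is carved out of the scene without any supervision.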

5. Two recognition systems in the human brain

Have you ever had this experience: you see someone and are sure you know them, but you cannot remember their name?

This phenomenon, peculiar to the human brain, inadvertently reveals an important secret about how the brain works.

Can't always remember the other person's name

Yan Shuicheng shared with Lei Feng Network a new hypothesis he had recently come across:

It suggests that the human brain may have two separate recognition systems: a parametric model and a non-parametric model.

The brain places unfamiliar people or objects in the non-parametric model. Once you see something often, the brain moves it to the parametric model. If you then go a long time without encountering it, it is moved back to the non-parametric model.

This is roughly the mechanism depicted in the animated film "Inside Out."

This mechanism is closely tied to how humans learn.

For example, suppose parents have just taught their child the word "horse." At that point, "horse" enters the non-parametric model in the child's brain. Then one day the child visits the zoo and sees a new horse. Once enough such samples have accumulated, "horse" moves into the parametric model.
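The hypothesis can be made concrete with a toy memory. It rests on several illustrative assumptions:

- Feature vectors stand in for what is seen.
- The "non-parametric" store keeps raw exemplars and matches by nearest neighbour.
- The "parametric" store keeps only a fitted prototype, here just a mean vector.
- The thresholds `promote_after` and `demote_after` are arbitrary.

`DualMemory` is a hypothetical name, and nothing here is claimed to be the model Yan Shuicheng described.

```python
import math
from typing import Dict, List, Optional, Sequence


class DualMemory:
    """Toy two-store memory (hypothetical illustration of the hypothesis).

    Non-parametric store: raw exemplars, matched by nearest neighbour.
    Parametric store: one fitted prototype (the mean vector) per label.
    A label is promoted after `promote_after` sightings and demoted again
    if it goes unseen for more than `demote_after` observations.
    """

    def __init__(self, promote_after: int = 3, demote_after: int = 100) -> None:
        self.promote_after = promote_after
        self.demote_after = demote_after
        self.exemplars: Dict[str, List[List[float]]] = {}
        self.prototypes: Dict[str, List[float]] = {}
        self.last_seen: Dict[str, int] = {}
        self.clock = 0  # counts observations, used as "time"

    def observe(self, label: str, x: Sequence[float]) -> None:
        self.clock += 1
        self.last_seen[label] = self.clock
        samples = self.exemplars.setdefault(label, [])
        samples.append(list(x))
        if len(samples) >= self.promote_after:
            # Fit the "parameters": here simply the per-dimension mean.
            self.prototypes[label] = [sum(col) / len(samples) for col in zip(*samples)]
        for other in list(self.prototypes):
            if self.clock - self.last_seen[other] > self.demote_after:
                del self.prototypes[other]  # unseen for a while: back to exemplars only

    def store_of(self, label: str) -> str:
        return "parametric" if label in self.prototypes else "non-parametric"

    def recognize(self, x: Sequence[float]) -> Optional[str]:
        best, best_dist = None, math.inf
        for label, proto in self.prototypes.items():
            d = math.dist(proto, x)
            if d < best_dist:
                best, best_dist = label, d
        for label, samples in self.exemplars.items():
            if label in self.prototypes:
                continue  # already summarised by its prototype
            for s in samples:
                d = math.dist(s, x)
                if d < best_dist:
                    best, best_dist = label, d
        return best


mem = DualMemory(promote_after=3, demote_after=5)
mem.observe("horse", [1.0, 1.0])         # the first horse the child is shown
print(mem.store_of("horse"))             # non-parametric
mem.observe("horse", [1.2, 0.8])         # new horses seen at the zoo
mem.observe("horse", [0.8, 1.2])
print(mem.store_of("horse"))             # parametric
for _ in range(6):
    mem.observe("dog", [5.0, 5.0])       # no horses for a while
print(mem.store_of("horse"), mem.store_of("dog"))  # non-parametric parametric
print(mem.recognize([0.9, 1.1]))         # horse
```

Even after demotion, the horse is still recognized through its stored exemplars. In the hypothesis, likewise, a face can still be familiar once its name has slipped away.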

This cognitive model is highly significant for improving the structure of machine learning, and it seems to offer a glimmer of light for unsupervised learning and self-learning in machines. However, Yan Shuicheng said that human learning is far from simple. People learn not only from images but also from sound and semantics, and in these areas the research gap is still very large.

6. Semantic understanding

For AI, the four most important directions are vision, speech, semantics, and big data.

Scientists have already achieved fairly usable artificial intelligence in vision, speech, and big data. Only semantic understanding, the most important and most perceptible of the four, has stood still.

This is why today's AI robots all seem to talk some "nonsense."

The reason is the one mentioned before: the technology currently used to implement semantic understanding works completely differently from the architecture of the human brain. People understand language not only from what the other party says, but also from factors such as context, background knowledge, and emotion. Scientists cannot yet handle any of these factors well.

Yan Shuicheng admitted that research in this area is very difficult and beyond his own expertise. His team is therefore concentrating on its own research areas, vision and big data, in order to achieve more there.

AI: these two letters are full of mystery and romance. They represent not only our longing for the unknown, but also our hopes for creation and our highest tribute to our own intelligence.

Every insight in the field of artificial intelligence can bring us closer to the ultimate answer.

This makes us happy.

Yan Shuicheng is Chief Scientist of 360 and Dean of the 360 Institute of Artificial Intelligence. He previously led the Machine Learning and Computer Vision Laboratory at the National University of Singapore. His main research areas are computer vision, deep learning, and multimedia analysis. The "Network in Network" architecture proposed by his team has given a major boost to deep learning. Over five years, his team won first or second place seven times at PASCAL VOC and ILSVRC, the "World Cup" competitions of computer vision.
