On September 17, 2018, Wuhan Huanyu Zhixing released the Athena (ATHENA) autonomous driving software system in Shanghai. The system aims to provide high-performance AI computation and algorithms that make perception and decision-making more broadly applicable and more reliable.

This system integrates multiple functions such as high-precision maps, image recognition, decision-making control, and path planning. It also includes virtual simulation technology to provide technical support for the upgrade and testing of autonomous driving technology.

Virtual simulation technology

In image recognition, the autonomous driving system must distinguish different objects through visual perception, such as apples, oranges, zebras, mountains, and buildings. The traditional approach is to improve recognition ability by continually training deep learning models on a sample library.

This approach depends heavily on how complete the sample library is. In practice, the library comes from a few well-known datasets, which provide the shape, color, and other attributes of objects in most scenes but do not cover rare objects. A deep learning model trained on an incomplete sample library will inevitably have compromised recognition ability.

But autonomous driving cannot tolerate such a compromise.

The ATHENA system includes a virtual simulation system that can deform objects and change scenes, and the color and background of a given object can be adjusted through parameters. In this way, a model can be trained to recognize the original object without collecting a large number of data samples.
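The idea of producing many training variants from a single source image by adjusting parameters can be sketched as follows. This is a minimal illustration under stated assumptions, not ATHENA's implementation: the function names (`shift_color`, `replace_background`, `flip_horizontal`, `make_variants`), the tiny RGB-grid image, and the object mask are all hypothetical.

```python
from itertools import product

# Assumed representation: an image is a list of rows, each pixel an (r, g, b)
# tuple. A mask of the same shape marks object pixels (True) vs. background.

def shift_color(image, mask, delta):
    """Add an (r, g, b) offset to object pixels only, clamped to 0..255."""
    return [
        [
            tuple(max(0, min(255, c + d)) for c, d in zip(px, delta)) if m else px
            for px, m in zip(row, mrow)
        ]
        for row, mrow in zip(image, mask)
    ]

def replace_background(image, mask, background):
    """Keep object pixels and fill every background pixel with one color."""
    return [
        [px if m else background for px, m in zip(row, mrow)]
        for row, mrow in zip(image, mask)
    ]

def flip_horizontal(image):
    """A very simple geometric change: mirror each row."""
    return [list(reversed(row)) for row in image]

def make_variants(image, mask, color_deltas, backgrounds):
    """Generate one variant per (color offset, background, flip) combination."""
    variants = []
    for delta, bg, flip in product(color_deltas, backgrounds, (False, True)):
        out = replace_background(shift_color(image, mask, delta), mask, bg)
        variants.append(flip_horizontal(out) if flip else out)
    return variants

# A 2x2 toy image: the left column is the object, the right column background.
image = [[(200, 30, 30), (10, 10, 10)],
         [(200, 30, 30), (10, 10, 10)]]
mask = [[True, False],
        [True, False]]

variants = make_variants(
    image, mask,
    color_deltas=[(0, 0, 0), (40, 40, 0)],
    backgrounds=[(0, 0, 0), (255, 255, 255)],
)
print(len(variants))  # 2 color offsets x 2 backgrounds x 2 flips
```

A real simulator would render deformations and scene changes in 3D. The point here is only that a handful of parameters multiplies one sample into many.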

To deploy self-driving cars in mass production, a solution must be tested and verified over billions of kilometers of driving to ensure adequate safety and reliability. Therefore, in addition to virtual simulation of the image database, the system can also simulate different road environments, obstacles, vehicle power parameters, and so on.

The system supports HIL (Hardware-in-the-Loop), SIL (Software-in-the-Loop), and VIL (Vehicle-in-the-Loop) testing, as well as test-track and public-road testing.

For reasons of safety, feasibility, and cost, hardware-in-the-loop testing has become a very important part of the ECU development process. It reduces the number of on-vehicle road tests, shortens development time, and lowers costs while improving ECU software quality and reducing risk for automakers.

Testing a real embedded control system on a car is often complicated, expensive, and dangerous. HIL simulation allows engineers to test embedded devices efficiently and comprehensively in a virtual environment.

SIL is an equivalence test. Its purpose is to verify that the code behaves consistently with the control model in all functions. The basic principle is to feed SIL the same test-case inputs used for MIL (Model-in-the-Loop), compare the MIL output with the SIL output, and check whether the deviation between the two is within an acceptable range.
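The comparison described here, often called a back-to-back test, can be sketched in a few lines. This is an illustrative sketch only: `model_fn` stands in for the control model, `code_fn` for the generated code, and the gain of 0.3, the 1/256 output resolution, and the tolerance are made-up values.

```python
def max_deviation(mil_outputs, sil_outputs):
    """Largest absolute difference between paired model and code outputs."""
    if len(mil_outputs) != len(sil_outputs):
        raise ValueError("MIL and SIL runs must produce the same number of outputs")
    return max((abs(m - s) for m, s in zip(mil_outputs, sil_outputs)), default=0.0)

def back_to_back_test(model_fn, code_fn, test_inputs, tolerance):
    """Run the same inputs through both implementations and compare the results."""
    mil = [model_fn(x) for x in test_inputs]
    sil = [code_fn(x) for x in test_inputs]
    deviation = max_deviation(mil, sil)
    return deviation <= tolerance, deviation

# Hypothetical stand-ins: a floating-point control law and a version whose
# output is quantized to steps of 1/256, as fixed-point code might be.
def model_fn(x):
    return 0.3 * x

def code_fn(x):
    return round(0.3 * x * 256) / 256

test_inputs = [0.0, 1.0, 2.5, -4.2]
passed, deviation = back_to_back_test(model_fn, code_fn, test_inputs, tolerance=1 / 256)
print(passed, deviation)
```

The tolerance matters: quantized code can never match the model exactly, so the acceptable range has to be chosen from the resolution the target hardware allows.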

VIL integrates the system under test into a real vehicle and uses real-time simulators and simulation software to simulate roads, traffic scenes, and sensor signals, forming a complete test environment. This enables system function verification, simulation testing of various scenarios, and matching and integration testing with the vehicle's related electronic control systems.

Compared with traditional hardware-in-the-loop (HIL) testing, VIL greatly improves the accuracy of performance test results for the controller under test because it replaces the vehicle model with a real vehicle. Complex and difficult-to-reproduce traffic scenes are simulated, so a wide range of test conditions can be set up quickly, and because those conditions are repeatable, system algorithms can be iterated rapidly. In short, VIL bridges the gap between real-vehicle testing and HIL testing.

Environmental awareness

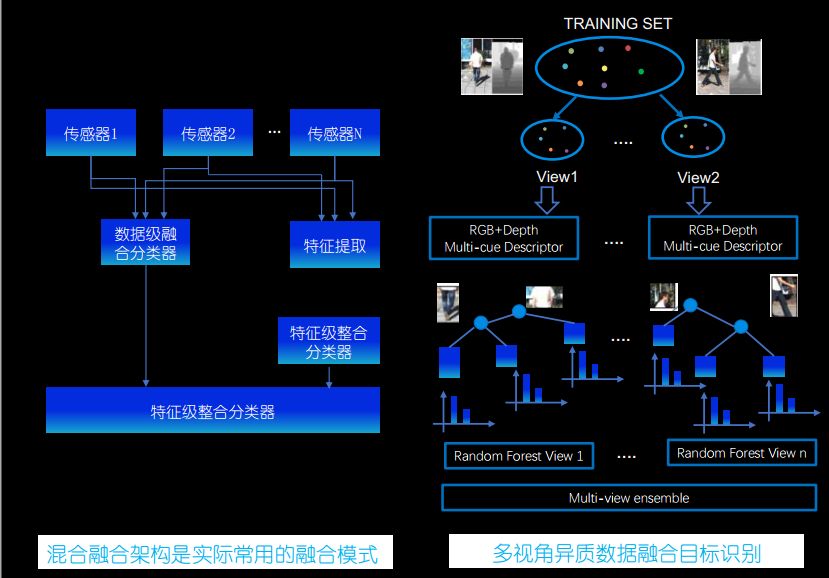

Xie Xing, head of technology at Huanyu Zhixing, believes that fusing 3D perception with heterogeneous data is the direction for complex perception in high-level autonomous driving.

Traditional environmental perception works from a 2D perspective, so its recognition of objects is not comprehensive enough. To recognize an object fully, its state and shape must be viewed from different directions.

Object recognition will therefore have to move toward 3D, gathering data on an object from different angles in order to reconstruct its actual shape.

Moving from 2D to 3D requires three-dimensional point cloud data, but current point cloud data has several limiting characteristics:

1. Disorder: the points are unordered, so the data is difficult to process directly with an End2End model;

2. Sparsity: only about 3% of pixels have corresponding radar points. This extreme sparsity makes high-level semantic perception of point clouds particularly difficult;

3. Limited information: a point cloud is essentially a low-resolution resampling of the geometric shapes of the three-dimensional world.

Deep learning models for three-dimensional point clouds include the voxel-based networks VoxelNet and VoxelNet++, multi-scale fusion models, the point-based networks PointNet and PointNet++, and graph-based learning models.
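Two ideas behind these model families can be illustrated with a small sketch: grouping points into voxels, and summarizing each group with a symmetric function so that point order does not matter. This is not the architecture of any of the named networks, which learn per-point features before pooling. The function names and the per-axis maximum used as a "feature" are assumptions for illustration.

```python
from collections import defaultdict
import math

def voxelize(points, voxel_size):
    """Group (x, y, z) points by the integer index of the voxel containing them."""
    voxels = defaultdict(list)
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        voxels[key].append((x, y, z))
    return dict(voxels)

def max_pool(points):
    """Per-axis maximum: a symmetric function, so input order is irrelevant."""
    return tuple(max(axis) for axis in zip(*points))

def voxel_features(points, voxel_size):
    """One pooled feature per occupied voxel; empty voxels are never stored."""
    return {key: max_pool(pts) for key, pts in voxelize(points, voxel_size).items()}

points = [(0.1, 0.2, 0.3), (0.4, 0.1, 0.2), (1.5, 0.2, 0.1), (-0.2, 0.0, 0.0)]
features = voxel_features(points, voxel_size=1.0)
print(len(features))

# Shuffling the input does not change the result.
print(voxel_features(list(reversed(points)), voxel_size=1.0) == features)
```

Storing only occupied voxels is one common way to cope with sparsity, and the order-independent pooling addresses the disorder of raw point clouds.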

Environment perception for autonomous driving also relies on deep-learning-based feature representation of heterogeneous data, feature selection for big data, and intelligent driving environment perception.

Of these, feature selection for big data requires a search strategy that generates candidate feature subsets, each of which is then evaluated by an algorithm. The selected feature subset is fed to an MLP network that performs classification and localization to produce the final result.
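A search strategy of this kind can be sketched as greedy forward selection: generate candidate subsets by adding one feature at a time, score each candidate, and keep the best. The article does not say which search strategy or evaluation algorithm is used, so both are assumptions here. The scorer below is a simple nearest-centroid accuracy standing in for a real evaluator, and the MLP stage is omitted.

```python
def forward_select(n_features, score, max_size):
    """Greedy search: grow the subset by the feature that most improves the score."""
    selected, best = [], float("-inf")
    while len(selected) < max_size:
        candidates = [selected + [f] for f in range(n_features) if f not in selected]
        if not candidates:
            break
        top = max(candidates, key=score)
        top_score = score(top)
        if top_score <= best:
            break  # no candidate improves on the current subset
        selected, best = top, top_score
    return selected, best

def centroid_accuracy(samples, labels, subset):
    """Fraction of samples whose nearest class centroid (on the subset) is correct."""
    classes = sorted(set(labels))
    centroids = {}
    for c in classes:
        rows = [s for s, l in zip(samples, labels) if l == c]
        centroids[c] = [sum(r[f] for r in rows) / len(rows) for f in subset]
    correct = 0
    for s, l in zip(samples, labels):
        def dist(c):
            return sum((s[f] - m) ** 2 for f, m in zip(subset, centroids[c]))
        if min(classes, key=dist) == l:
            correct += 1
    return correct / len(samples)

# Toy data: feature 0 is noise, feature 1 separates the classes, feature 2 is constant.
samples = [[0.9, 0.1, 5.0], [0.1, 0.2, 5.0], [0.8, 0.9, 5.0], [0.2, 1.0, 5.0]]
labels = [0, 0, 1, 1]

selected, best = forward_select(
    n_features=3,
    score=lambda subset: centroid_accuracy(samples, labels, subset),
    max_size=2,
)
print(selected, best)
```

Greedy search is cheap but can miss feature combinations that only help together. Exhaustive or randomized searches trade more computation for better coverage.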

The entire perception process relies heavily on deep learning models and convolutional neural networks to refine the abstract, non-linear environment into multi-layer, deep feature elements. The learning model used by Huanyu Zhixing is a voxel-based deep network consisting of a Feature Learning Network, Convolutional Middle Layers, and an RPN (Region Proposal Network).

Decision planning, service application

Once the autonomous driving system can sense its environment and position itself with high precision, it also needs to make decisions and plans. The company handles this at three levels: Mission-level Planning, Behavior-level Planning, and Trajectory-level Planning.

The Athena software system provides mature solutions for perception algorithms, decision planning, data, and map simulation, and can provide technical assistance to autonomous driving companies and OEMs. Customers can obtain simulation results or corresponding test reports by providing data.

The company can also provide various APIs and SDKs, allowing users to build rich, customized applications.
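The article names the three levels without describing them, so the following sketch relies on a common reading of such a hierarchy rather than on the company's design: the mission level picks a route over a road graph, the behavior level picks a maneuver, and the trajectory level produces waypoints. Every function name, the road graph, and the maneuver rules are hypothetical.

```python
import heapq

def plan_mission(graph, start, goal):
    """Mission level: shortest route over a road graph (Dijkstra's algorithm)."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for nxt, length in graph.get(node, {}).items():
            if nxt not in visited:
                heapq.heappush(queue, (cost + length, nxt, path + [nxt]))
    return None  # goal unreachable

def plan_behavior(obstacle_ahead, adjacent_lane_free):
    """Behavior level: choose a maneuver from the current traffic situation."""
    if not obstacle_ahead:
        return "keep_lane"
    return "change_lane" if adjacent_lane_free else "stop"

def plan_trajectory(start_xy, end_xy, steps):
    """Trajectory level: evenly spaced waypoints along a straight segment."""
    (x0, y0), (x1, y1) = start_xy, end_xy
    return [
        (x0 + (x1 - x0) * i / steps, y0 + (y1 - y0) * i / steps)
        for i in range(steps + 1)
    ]

# Hypothetical road graph: node -> {neighbor: segment length in km}.
roads = {
    "A": {"B": 1.0, "C": 4.0},
    "B": {"C": 1.0, "D": 5.0},
    "C": {"D": 1.0},
    "D": {},
}

route = plan_mission(roads, "A", "D")
maneuver = plan_behavior(obstacle_ahead=True, adjacent_lane_free=False)
waypoints = plan_trajectory((0, 0), (4, 2), steps=2)
print(route, maneuver, waypoints)
```

Each level works at a different time scale: the route changes rarely, the maneuver changes with traffic, and the trajectory is recomputed continuously.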
